The Force is Strong with This One: Why ChatGPT Isn’t Your Supervisor (Or Your Therapist)

Note: If you're not familiar with Star Wars, don't worry - each heading uses a quote from the series, but I’ve also included plain-English translations to keep things clear for you poor souls.

Understanding how AI works helps protect us - and our clients - from the ‘dark side’ of AI

May the 4th may be over, but the Force of ChatGPT is only growing stronger - especially in therapy spaces. As clinicians, we can no longer treat understanding how tools like ChatGPT are being used by and around our clients as optional. From supervision substitutes to client self-diagnosis, AI is reshaping mental health practice - and not always for the better.

The Risks of Using ChatGPT as a Substitute for Therapy or Supervision

The Allure of Instant Answers

ChatGPT is fast, free, and non-judgemental. That can feel like a godsend when you’re drowning in admin or processing a tricky case. But here’s the thing: it’s not a therapist. It’s not a supervisor. And without context or caution, it can start acting a lot like an echo chamber, reflecting back and affirming whatever you bring to it. That’s not helping anyone.

We’re starting to see this in practice:

Clients using ChatGPT to self-manage anxiety – sometimes effectively, sometimes destructively.

Abusers using AI to “prove” their point (“See!? Even ChatGPT agrees with me!”).

Early-career clinicians turning to free tools for supervision and walking away with advice that sounds polished and useful but may, in practice, be quite risky.

Why Understanding AI Is Now an Ethical Requirement for Clinicians

You Don’t Have to Use It – But You Do Need to Understand It

Whether you’re all-in on AI or still side-eyeing ChatGPT from across the room, our ethical responsibilities have evolved. Here's what we, as clinicians, need to be considering.

If you're using AI tools, whether as a Padawan, Jedi or Master (beginner, experienced or expert user):

Do you know how the tool was trained? Who built it, and what dataset was it based on?

Is it designed for clinical use, and is using it within your scope of practice?

Who sees the data you input? Can you guarantee confidentiality?

Are you covered under your indemnity insurance? Have you reviewed your obligations under AHPRA and the APS Code?

Do you have a process in place to obtain informed consent if AI is used in documentation or intervention?

Are you critically reviewing AI outputs - and do you have a plan for when the tool gives biased, incorrect, or unsafe advice?

Are you actively seeking out professional development to ensure your use of AI is safe, effective, and ethically sound?

Have you used an AI Ethics Checklist or another validation tool? (An AI Ethics Checklist is available free in the TToTT’s SPARKS Facebook group.)

If you're not using AI tools - but want to become a Han Solo type:

Can you confidently explain what ChatGPT is to a client or supervisee?

Do you know how to respond if a client says, “I have ChatGPT now, so I don’t need therapy anymore”?

Do you know how to respond if a client’s partner is using ChatGPT to gaslight them or to justify coercive control?

Are you up to date with Core Competency 6 (Digital Literacy)?

Have you considered how AI-generated narratives that clients bring into session may affect the therapeutic alliance or clinical insight?

Are you aware of any personal biases or knowledge gaps that could get in the way of gently exploring a client’s AI use - without shaming or dismissing it?

Can you recognise when AI is reinforcing unhelpful coping strategies - like checking, avoidance, or rumination?

Mini vignette:

Sarah, a new client, tells you she typed her symptoms into ChatGPT, and it suggested she has BPD. She's anxious, tearful, and unsure if you're going to agree. How do you respond with compassion - without handing over your clinical judgement to an algorithm or dismissing her experience out of hand?

It’s not just clients who can fall prey to the lure of the Dark Side of AI.

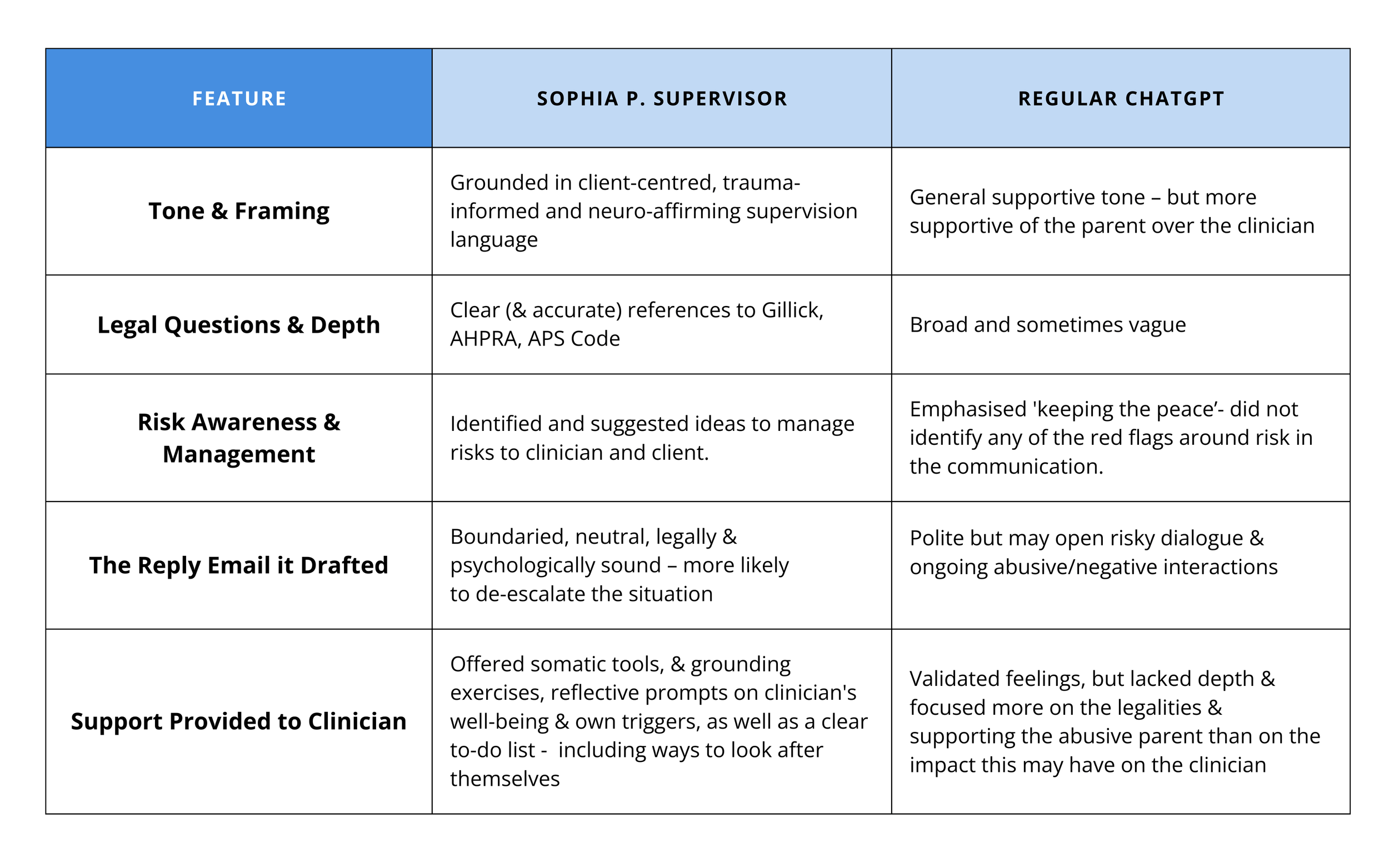

Clinicians, too, are at risk of mistaking smooth, affirming language for sound clinical guidance - especially when under stress or navigating tricky dynamics. That’s why I tested a real-life supervision scenario with two different chatbots: once using a generic, free version of ChatGPT, and once using Sophia P. Supervisor, a custom chatbot I built and trained on supervisory principles, psychotherapeutic foundations, and our Australian ethical guidelines.

This comparison was designed to show just how different the outcome can be depending on the tool, how it was trained, who trained it, and what it was built to prioritise. Of course, the quality of your prompt and your own experience also matter - but the foundation of the tool itself sets the tone.

The difference? Stark. Read on to see for yourself…

Comparing Regular ChatGPT vs Custom AI: A Supervision Case Study

Here’s a comparison between the responses generated in a free ChatGPT account (i.e., a generic version of ChatGPT that hasn't been customised for clinical work) and by Sophia P. Supervisor, a custom chatbot I built specifically for clinical supervision. The task was to respond to a clinician who had received a distressing and threatening email from the estranged father of one of her clients. The prompts I used were identical in each conversation.

Clinicians using Sophia reported feeling steadied, not just placated, and more confident in their decisions, not just comforted.

By now, it’s clear that not all chatbots are created equal - and as healthcare providers, we need to have a handle on that, whether or not we plan to use AI personally. Whether you’re a cautious observer or already experimenting with AI in your work, what matters most is how you use it: with intention, ethics, and an understanding of its strengths AND limitations. The tool you choose, how it was trained, and your ability to critically engage with it all shape the safety and usefulness of the outcome - for both you and your clients.

I've put together a side-by-side comparison of both chatbots' responses to the same real supervision scenario. Let’s take a closer look at that demo in the table...

Watch the ChatGPT vs Custom AI Demo and See the Difference for Yourself

Want to see how this plays out in practice?

I’ve created two video demos comparing:

Sophia P. Supervisor vs ChatGPT (responding to a high conflict situation with a client’s parent)

Coming soon: Health Anxiety chatbot vs ChatGPT (for clients)

The demos, plus the 2-page AI Ethics & Competency Checklist, are waiting for you inside our TToTT’s SPARKS Facebook group for clinicians.

Click HERE to join our FB Group - OR - click HERE to download it directly

Because staying grounded, ethical, and confident in the age of AI?

Your future self (and your clients) will thank you.

Danielle Graber

Clinical Psychologist & Director

12 Points Psychology & Training